Reproduce experiment results

DagsHub lets you compare and reproduce the results of ML experiments with the click of a button. Let's see how it works.

How does DagsHub achieve full reproducibility?

DagsHub achieves full reproducibility of ML experiments by leveraging the capabilities of Git, DVC, and MLflow. This combination of tools allows for comprehensive tracking and versioning of project components.

With Git and DVC, we can capture the version of every ML project component (code, data, configurations, annotations, models, and so on) under a single Git commit. This commit becomes the single source of truth for our project.

When you run experiments with MLflow, it automatically detects whether the code is executed within a Git repository, fetches the current Git commit, and logs it to DagsHub.

In the experiment tab, each run is associated with its commit. You can trace a run back to that commit with a click of a button, which gives you the version of every component and unlocks full reproducibility.

Additionally, if the model is logged using MLflow, it is stored on DagsHub servers and can be found in the MLflow UI.
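
The link between a run and its commit can also be read programmatically. The following is a minimal sketch, assuming MLflow stores the commit under the run tag `mlflow.source.git.commit` and that the MLflow tracking URI already points at your DagsHub repository. The helper names `commit_from_tags` and `commit_of_run` are hypothetical and not part of DagsHub or MLflow.

```python
from typing import Mapping, Optional

# Assumption: the tag key MLflow uses for runs launched from a Git repository.
GIT_COMMIT_TAG = "mlflow.source.git.commit"


def commit_from_tags(tags: Mapping[str, str]) -> Optional[str]:
    """Return the Git commit hash stored in a run's tags, or None if absent."""
    return tags.get(GIT_COMMIT_TAG)


def commit_of_run(run_id: str) -> Optional[str]:
    """Fetch a run from the configured tracking server and read its commit tag."""
    import mlflow  # imported lazily so commit_from_tags works without MLflow installed

    run = mlflow.get_run(run_id)
    return commit_from_tags(run.data.tags)
```

Calling `commit_of_run("<run-id>")` should return the hash you would otherwise copy from the experiment tab, or `None` if the run was not started from inside a Git repository.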

Starting point

To begin, we assume you have set up DagsHub. We also assume that your code is versioned with Git, your data is versioned with DVC, and your model is versioned with either DVC or MLflow. Additionally, experiments should be tracked using MLflow. These components form the foundation for reproducibility.

How to choose the best ML experiment?

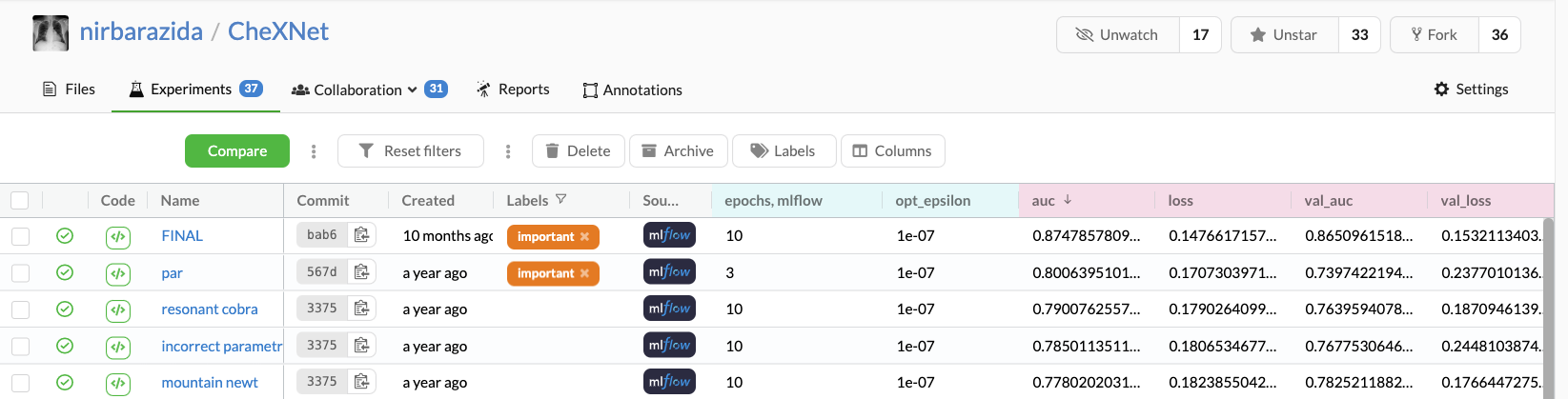

Before we reproduce an experiment, we need to first choose the experiment that provides the best results. To do so, we’ll click on the experiment tab, and sort the table based on our golden metric.

But is it enough to simply choose the first experiment in the sorted table? Real-life projects are not Kaggle competitions. We often try to optimize experiments based on multiple metrics and parameters. For that, we’ll choose the first X experiments and compare them to make a data-driven decision.
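
The same two-stage selection can be sketched in code: shortlist the top X runs by the golden metric, then filter the shortlist on a second metric. This is only an illustration. The metric names (`accuracy`, `latency_ms`), the values, and the helper functions are all made up for the example and do not come from any real experiment.

```python
from typing import Dict, List

Run = Dict[str, float]  # hypothetical shape: metric name -> value, for one run


def top_runs(runs: List[Run], golden_metric: str, k: int) -> List[Run]:
    """Return the k runs with the highest value of golden_metric."""
    return sorted(runs, key=lambda r: r[golden_metric], reverse=True)[:k]


def within_budget(runs: List[Run], metric: str, limit: float) -> List[Run]:
    """Keep only runs whose secondary metric does not exceed limit."""
    return [r for r in runs if r[metric] <= limit]


# Made-up values purely for illustration.
runs = [
    {"accuracy": 0.93, "latency_ms": 120.0},
    {"accuracy": 0.92, "latency_ms": 35.0},
    {"accuracy": 0.88, "latency_ms": 20.0},
]
shortlist = top_runs(runs, "accuracy", k=2)
candidates = within_budget(shortlist, "latency_ms", limit=50.0)
print(candidates)
```

Here the run with the best accuracy is dropped because it exceeds the latency budget, which is the kind of trade-off a single sorted column hides.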

How to reproduce an ML experiment on DagsHub?

To reproduce this experiment, all you need to do is click on the code icon. That’s it! DagsHub will lead you to the Git commit of the experiment’s run, which holds the version of every project component that produced the experiment results.

How to reproduce an ML experiment locally?

To reproduce the above experiment on a local machine, we will use Git and DVC in the following steps:

- Copy the Git commit hash from DagsHub

- Use Git to retrieve that commit

      git checkout <commit-hash>

- Use DVC to pull the version of the data and model by running

      dvc pull -r <remote-name>
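
If you reproduce experiments often, the steps above can be wrapped in a small script. The following is a rough sketch, assuming `git` and `dvc` are on your `PATH` and that it is run from the root of the repository. The function names `reproduce_commands` and `reproduce` are hypothetical.

```python
import subprocess
from typing import List


def reproduce_commands(commit_hash: str, remote_name: str) -> List[List[str]]:
    """Build the Git and DVC commands, in the order given in the steps above."""
    return [
        ["git", "checkout", commit_hash],
        ["dvc", "pull", "-r", remote_name],
    ]


def reproduce(commit_hash: str, remote_name: str) -> None:
    """Run each command in the current repository, stopping at the first failure."""
    for command in reproduce_commands(commit_hash, remote_name):
        subprocess.run(command, check=True)
```

Calling `reproduce("<commit-hash>", "<remote-name>")` runs both commands. Because of `check=True`, a failed checkout raises an error before DVC pulls anything, so data is never fetched for the wrong commit.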

How to retrieve MLflow artifacts from the DagsHub remote server?

To retrieve a version of artifacts (e.g., model, data) tracked by MLflow, follow these steps:

- Go to the MLflow UI on DagsHub

- Copy the URI pointing to the artifacts

- Run the following Python code:

      import mlflow

      mlflow.artifacts.download_artifacts(artifact_uri="<copied_artifact_uri>")

The artifact_uri can point to:

- a run: "runs:/c82f6add249f4b578241932d5b9bff74/finetuned", or

- a model: "models:/SquirrelDetector/5"
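
Instead of copying the URI from the UI, you can also build the two URI forms yourself. The following is a minimal sketch, assuming the MLflow tracking URI is already configured for your DagsHub repository. The helpers `run_artifact_uri`, `model_uri`, and `download` are hypothetical names. Only `mlflow.artifacts.download_artifacts` is an MLflow call.

```python
from typing import Optional


def run_artifact_uri(run_id: str, artifact_path: str) -> str:
    """Build a URI for an artifact path logged under a specific run."""
    return f"runs:/{run_id}/{artifact_path}"


def model_uri(name: str, version: int) -> str:
    """Build a URI for a specific version of a registered model."""
    return f"models:/{name}/{version}"


def download(artifact_uri: str, dst_path: Optional[str] = None) -> str:
    """Download the artifacts and return the local path they were saved to."""
    import mlflow  # imported lazily so the URI helpers work without MLflow installed

    return mlflow.artifacts.download_artifacts(
        artifact_uri=artifact_uri, dst_path=dst_path
    )
```

For example, `run_artifact_uri("c82f6add249f4b578241932d5b9bff74", "finetuned")` yields the run URI shown above, and passing it to `download` fetches the files to a local directory.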