Connect External Storage

DagsHub lets you connect external storage to your repositories, so you can access and interact with your data and large files without leaving the DagsHub platform.

This can be used to connect your data storage to Data Engine, so that you can manage and version your datasets in the same place where you manage your code, experiments, and models.

It can also be used to provide your own backing storage for your DVC remote.

What type of storage is supported?

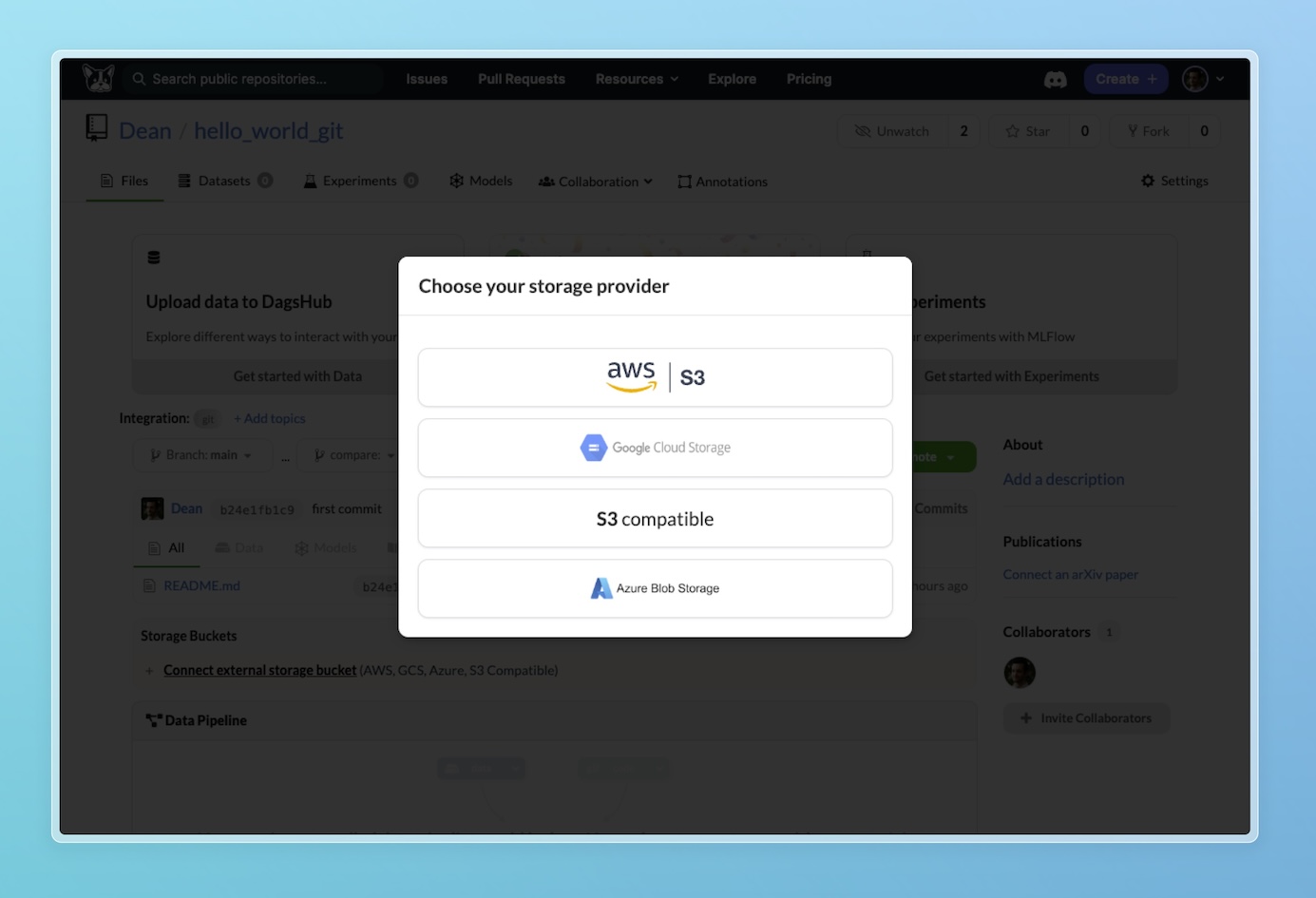

DagsHub provides integration with AWS S3, Google Cloud Storage (GCS), Azure Blob Storage and any S3-compatible storage, including MinIO.

What is S3-compatible storage?

S3-compatible storage refers to cloud storage systems that support the same API as Amazon Simple Storage Service (S3), a widely used object storage service from AWS. Alternative cloud providers offer S3-compatible storage so that users can work with the same tools and API calls they already use with Amazon S3.

S3 buckets can store any kind of object, such as images, videos, audio files, CSV files, and JSON files. They are widely used for storing large amounts of data because they offer high availability, durability, scalability, and security. They also support features such as encryption, lifecycle management, versioning, and access control.

How to connect an S3 bucket to a DagsHub repository?

This guide will walk you through connecting your bucket to DagsHub.

It assumes you have already created your bucket and set it up with the correct permissions.

This example uses AWS S3, but the other providers work similarly: simply choose the relevant provider from the list.

Connection flow for external buckets

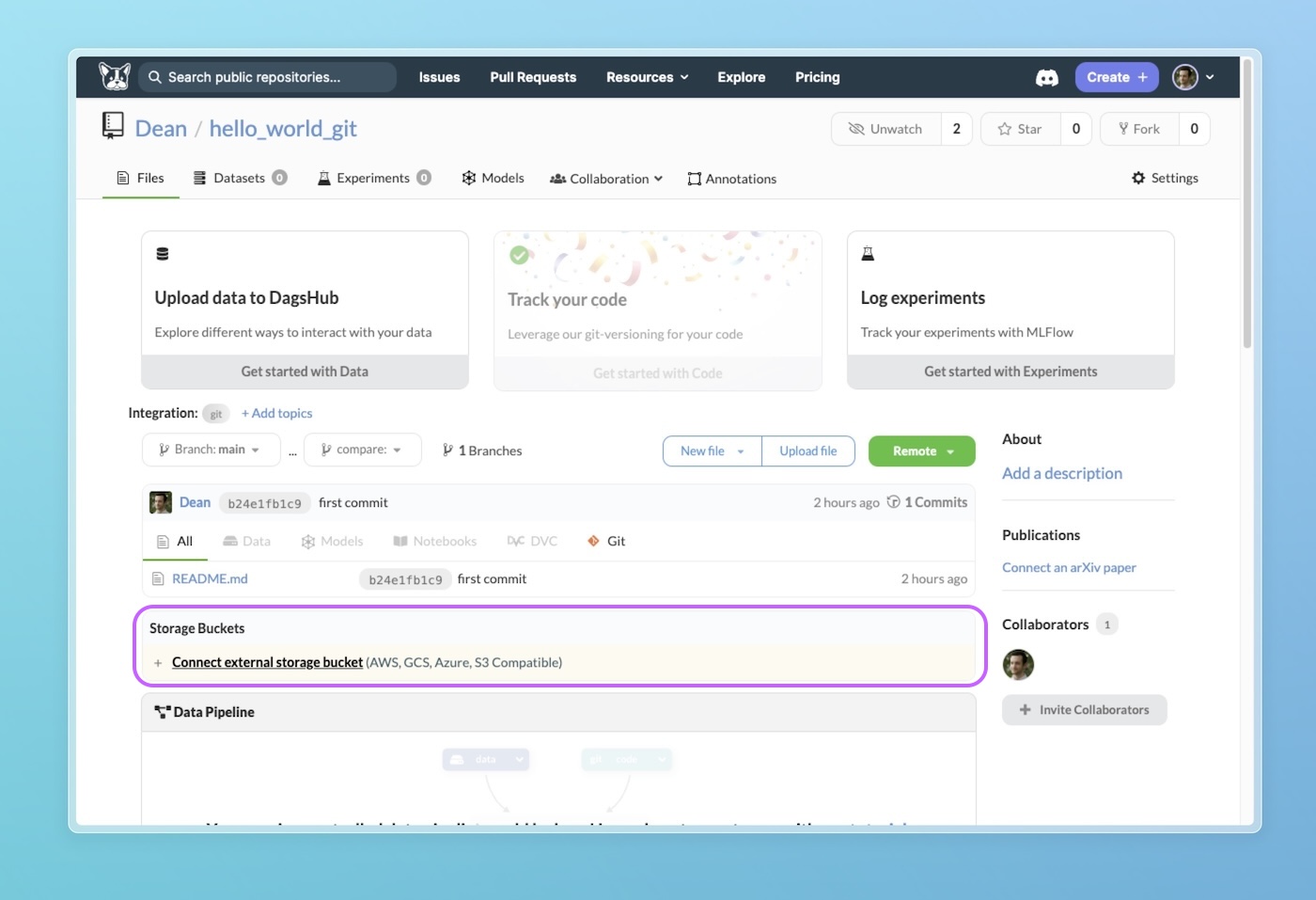

If you've already added code or connected your Git repo to your project, click the "Connect external storage bucket" button and select the storage you want to connect. If your project is entirely empty, go to the Settings tab and select the Integrations sub-section, where all available integrations are listed. Some integrations also appear on the empty repository page.

Follow the connection wizard and add the relevant details.

Note

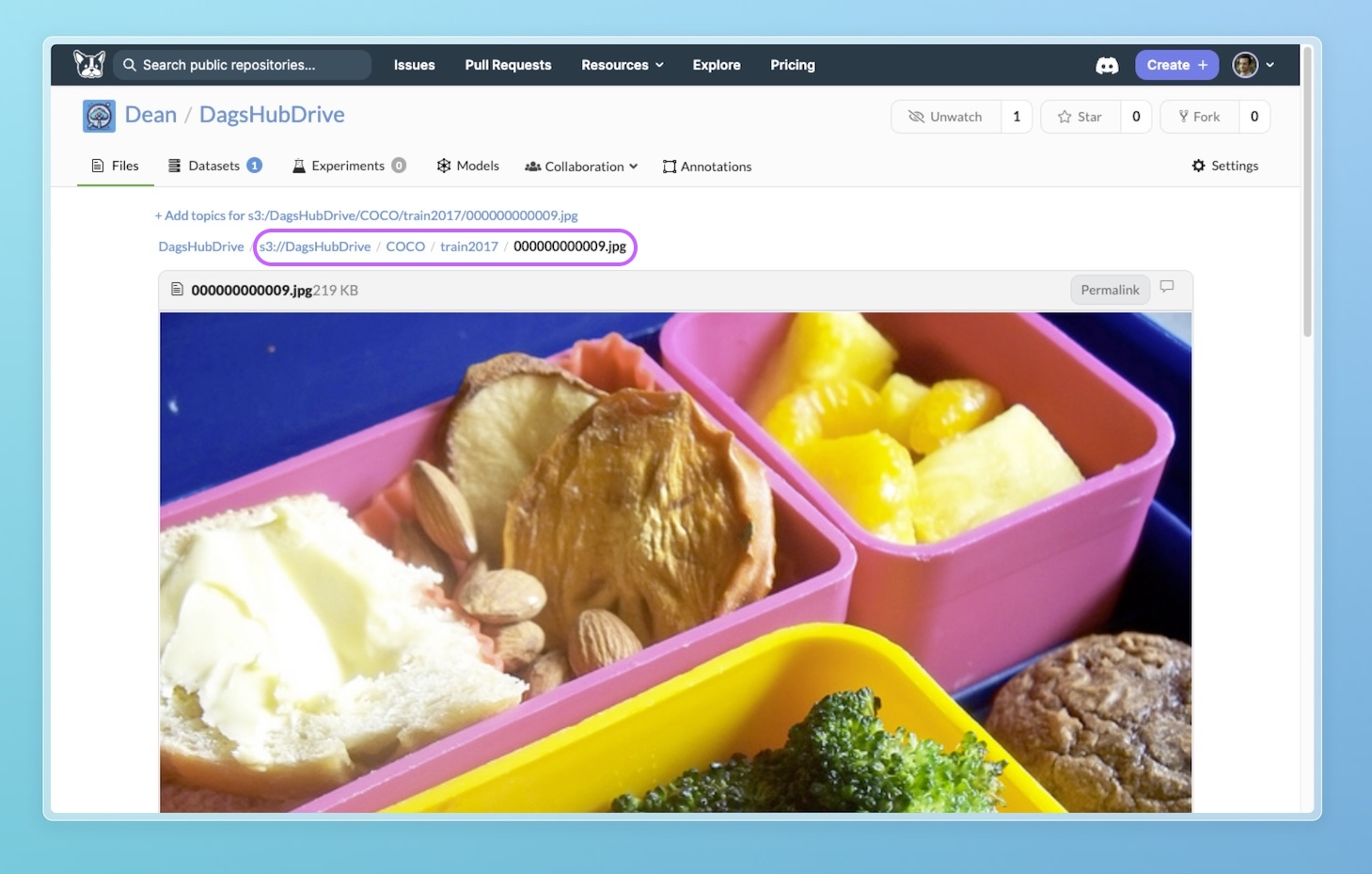

Your bucket URL should include the prefix for its provider: s3:// for AWS S3, gs:// for Google Cloud Storage, azure:// for Azure Blob Storage, and s3:// for S3-compatible storage.
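You may want to check a URL's prefix before pasting it into the wizard. The sketch below does that with a hypothetical helper, `parse_bucket_url`, which is not part of DagsHub or its client library. It splits a bucket URL into prefix, bucket name, and path inside the bucket, and it rejects URLs that lack one of the supported prefixes. For `azure://` URLs, it assumes the first component is the container name. Check the wizard for the exact layout it expects.

```python
from urllib.parse import urlparse

# Prefixes from the note above. "s3" covers both AWS S3 and S3-compatible
# storage such as MinIO, so the prefix alone cannot tell those two apart.
SUPPORTED_PREFIXES = {
    "s3": "AWS S3 or S3-compatible storage",
    "gs": "Google Cloud Storage",
    "azure": "Azure Blob Storage",  # Assumption: first component = container name.
}


def parse_bucket_url(url: str) -> tuple[str, str, str]:
    """Split a bucket URL into (prefix, bucket name, path inside the bucket).

    Hypothetical helper: raises ValueError for a missing or unsupported
    prefix, or for a missing bucket name.
    """
    parsed = urlparse(url)
    if parsed.scheme not in SUPPORTED_PREFIXES:
        expected = ", ".join(f"{p}://" for p in SUPPORTED_PREFIXES)
        raise ValueError(f"{url!r} must start with one of: {expected}")
    if not parsed.netloc:
        raise ValueError(f"{url!r} has no bucket name after the prefix")
    return parsed.scheme, parsed.netloc, parsed.path.lstrip("/")


print(parse_bucket_url("s3://good-dog-pics/10plus"))  # ('s3', 'good-dog-pics', '10plus')
```

A URL such as `good-dog-pics/10plus`, with no prefix, raises a `ValueError` instead of being silently accepted.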

Accessing and viewing your connected storage bucket

Once you have connected your bucket, it appears in the "Storage Buckets" section of your Files tab. There you can browse its contents, download files, and create a Data Engine Datasource from it for convenient annotation and training.

How to stream files hosted on an S3 bucket?

Data Streaming supports streaming files from buckets connected to your DagsHub repository, so you can access them as if they were on your local file system. Files are downloaded on demand.

Here's some example code for an S3 bucket:

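```python
from dagshub.streaming import DagsHubFilesystem

# Point the filesystem at your DagsHub repository; "." is the local project root.
fs = DagsHubFilesystem(".", repo_url="https://dagshub.com/<user_name>/<repo_name>")

# List the "10plus" folder of the connected "good-dog-pics" bucket.
fs.listdir("s3://good-dog-pics/10plus")
```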
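"Downloaded on demand" means each file is fetched only the first time it is read and is served from a local copy afterwards. The class below is a generic, hypothetical sketch of that idea, not DagsHub's implementation. `OnDemandCache` and `fake_fetch` are illustrative names, and `fake_fetch` stands in for whatever actually retrieves bytes from the bucket.

```python
import tempfile
from pathlib import Path
from typing import Callable


class OnDemandCache:
    """Hypothetical lazy cache: download a file on first read, reuse the local copy after."""

    def __init__(self, cache_dir: Path, fetch: Callable[[str], bytes]) -> None:
        self.cache_dir = cache_dir
        self.fetch = fetch  # Stand-in for the real download from the bucket.

    def read_bytes(self, key: str) -> bytes:
        # Mirror the bucket-relative key under the cache directory.
        local = self.cache_dir / key.lstrip("/")
        if not local.exists():
            # First access: fetch the remote bytes and store them locally.
            local.parent.mkdir(parents=True, exist_ok=True)
            local.write_bytes(self.fetch(key))
        # Every access is served from the local copy.
        return local.read_bytes()


# Usage with a fake fetch function that records each "download".
downloads: list[str] = []


def fake_fetch(key: str) -> bytes:
    downloads.append(key)
    return b"woof"


with tempfile.TemporaryDirectory() as tmp:
    cache = OnDemandCache(Path(tmp), fake_fetch)
    first = cache.read_bytes("10plus/rex.jpg")   # Triggers a download.
    second = cache.read_bytes("10plus/rex.jpg")  # Served from the cache.

print(first, second, downloads)  # b'woof' b'woof' ['10plus/rex.jpg']
```

Reading the same key twice triggers only one fetch. This is the property that makes large buckets practical to browse without downloading them up front.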

Data Streaming also works with the magical install_hooks() function:

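```python
import os

from dagshub.streaming import install_hooks

# Patch Python's built-in file functions so repository paths are streamed on demand.
# Assumes this runs inside a local clone of the repository; otherwise pass repo_url=...
install_hooks()

# Standard library calls now see the connected bucket as well.
os.listdir("s3://good-dog-pics/10plus")
```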