Data Science Peer Review

Peer review is an essential part of any scientific process or engineering project: it assesses the work to ensure it meets standards of quality, accuracy, and consistency. Peers can identify errors, provide constructive feedback, and validate findings. This iterative feedback loop strengthens methodologies, builds trust in the results, and creates a knowledge base to rely on in the future.

In machine learning projects, peer review is a challenging task. The components are spread over multiple platforms (Git server, storage, experiment server, annotation tool, etc.), which makes it hard to gather and review all the relevant information and understand the full picture. Additionally, discussing these components often requires third-party platforms (Slack, Discord, etc.) and sharing screenshots of files, making it difficult to track decision-making conversations.

This often results in one of three outcomes: losing valuable information, investing time in documenting our work, or simply skipping the peer review process. Each of these consequences significantly impacts the project's quality, collaboration, and overall success.

To ensure data scientists and machine learning practitioners can thoroughly review each other's work, DagsHub includes several features that help streamline this process.

Viewing all project components

DagsHub provides a centralized platform to host all machine learning project components in one place. This includes the Git repository, data storage, experiment server, annotation tools, and more. By consolidating these elements within DagsHub, technical colleagues and less technical stakeholders can easily access and review all relevant information in a cohesive and organized manner, which gives them the project's full picture.

Each of the following components is available and browsable on DagsHub and shareable via a URL:

- Code

- Data - view images, listen to audio and see their spectrogram, etc.

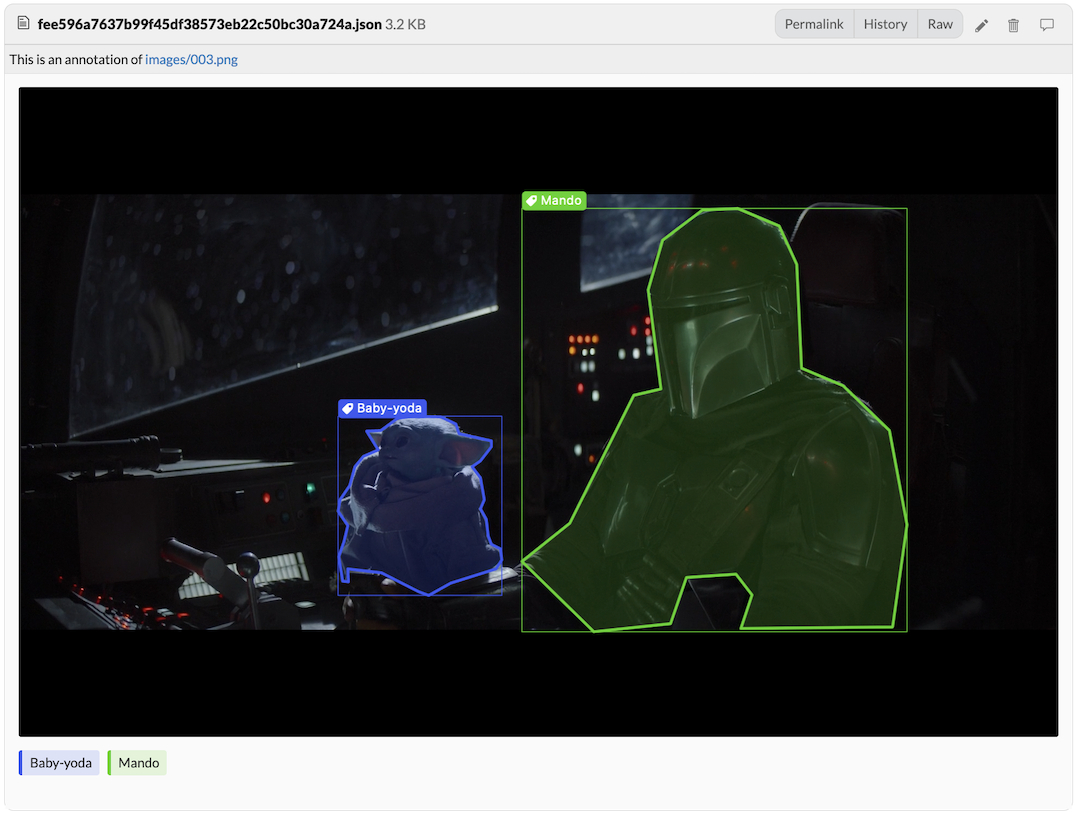

- Annotations - visualize labels and annotations directly on the data

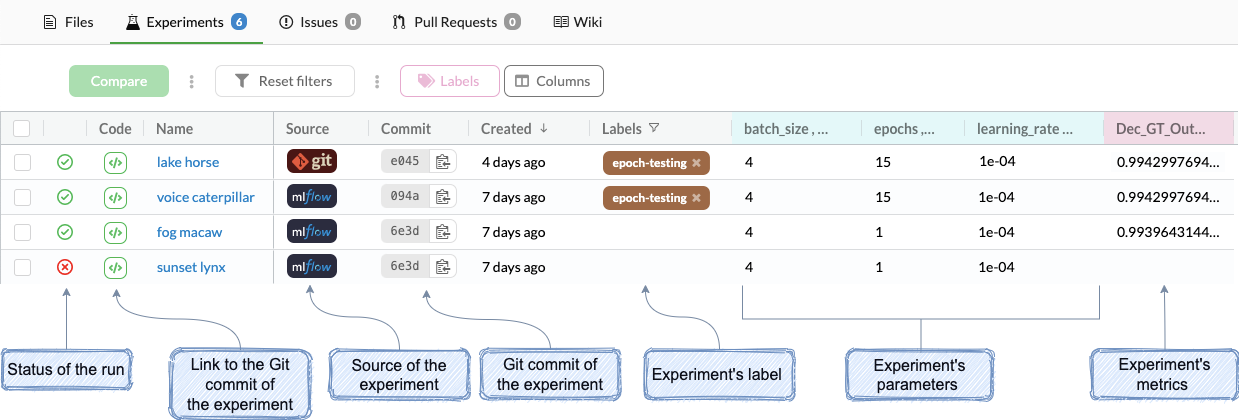

- Experiments - see all metrics of experiments in one spot

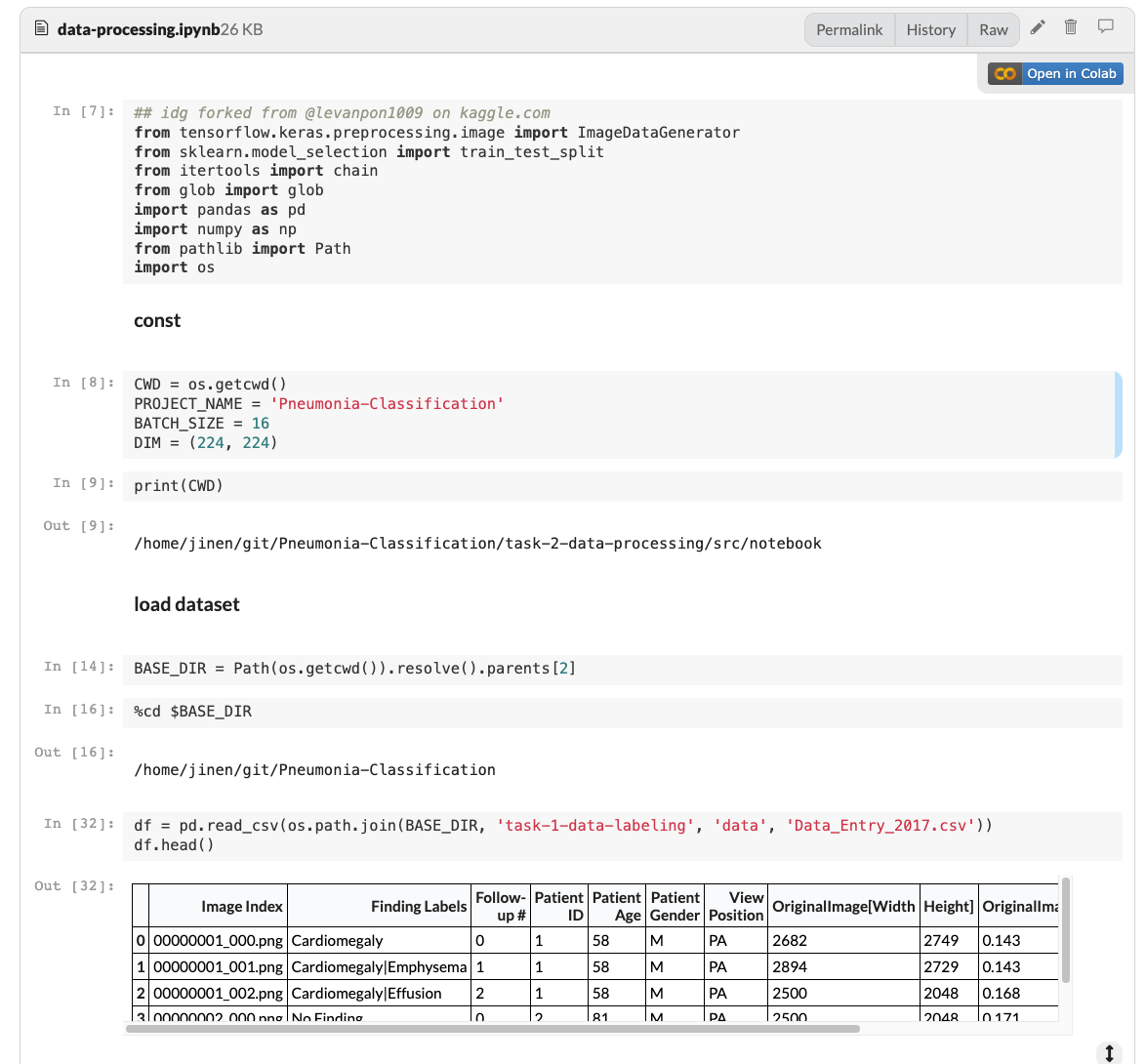

- Notebooks - view on DagsHub and open in Colab

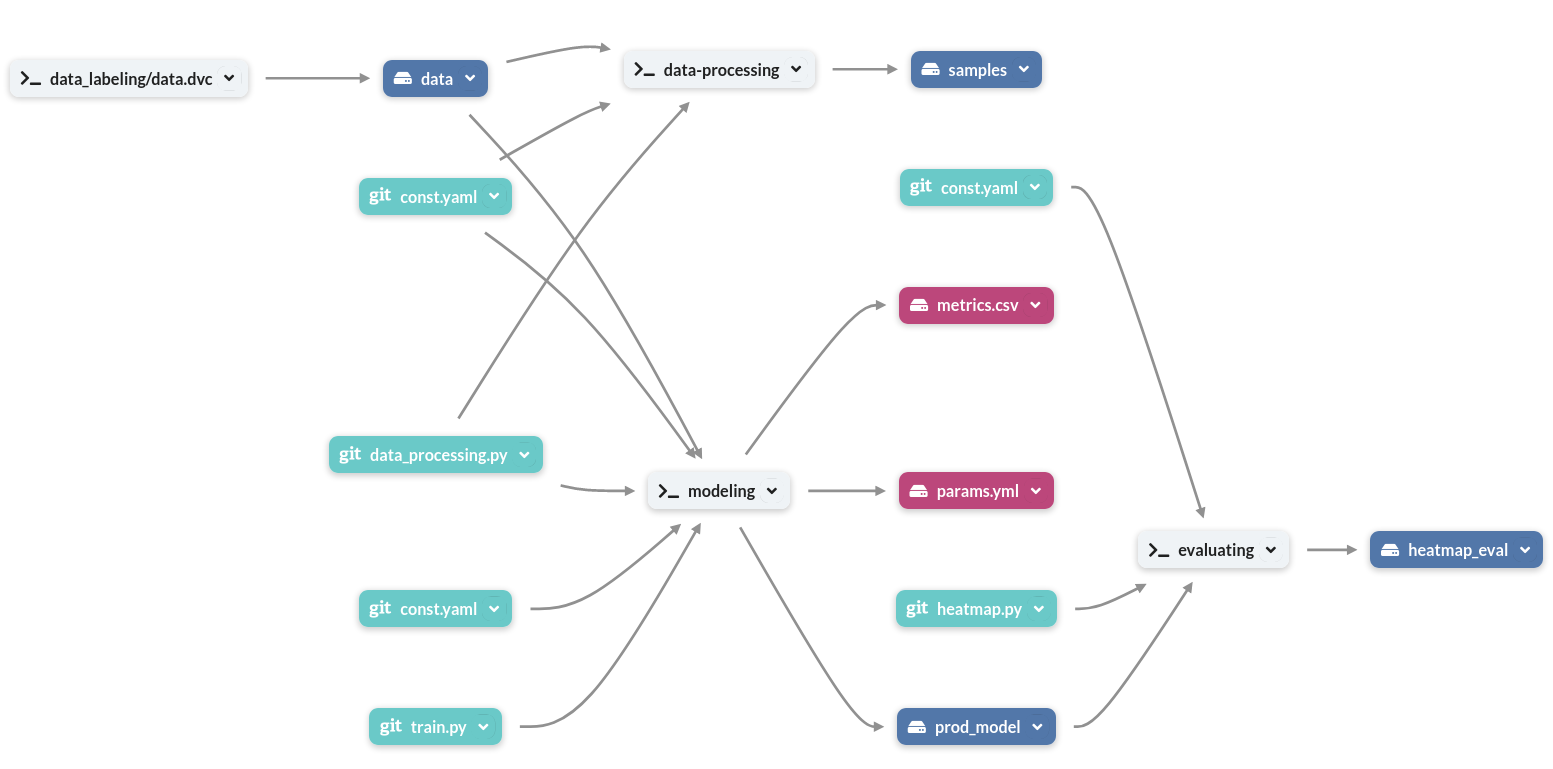

- Pipelines - DAG representation of the project

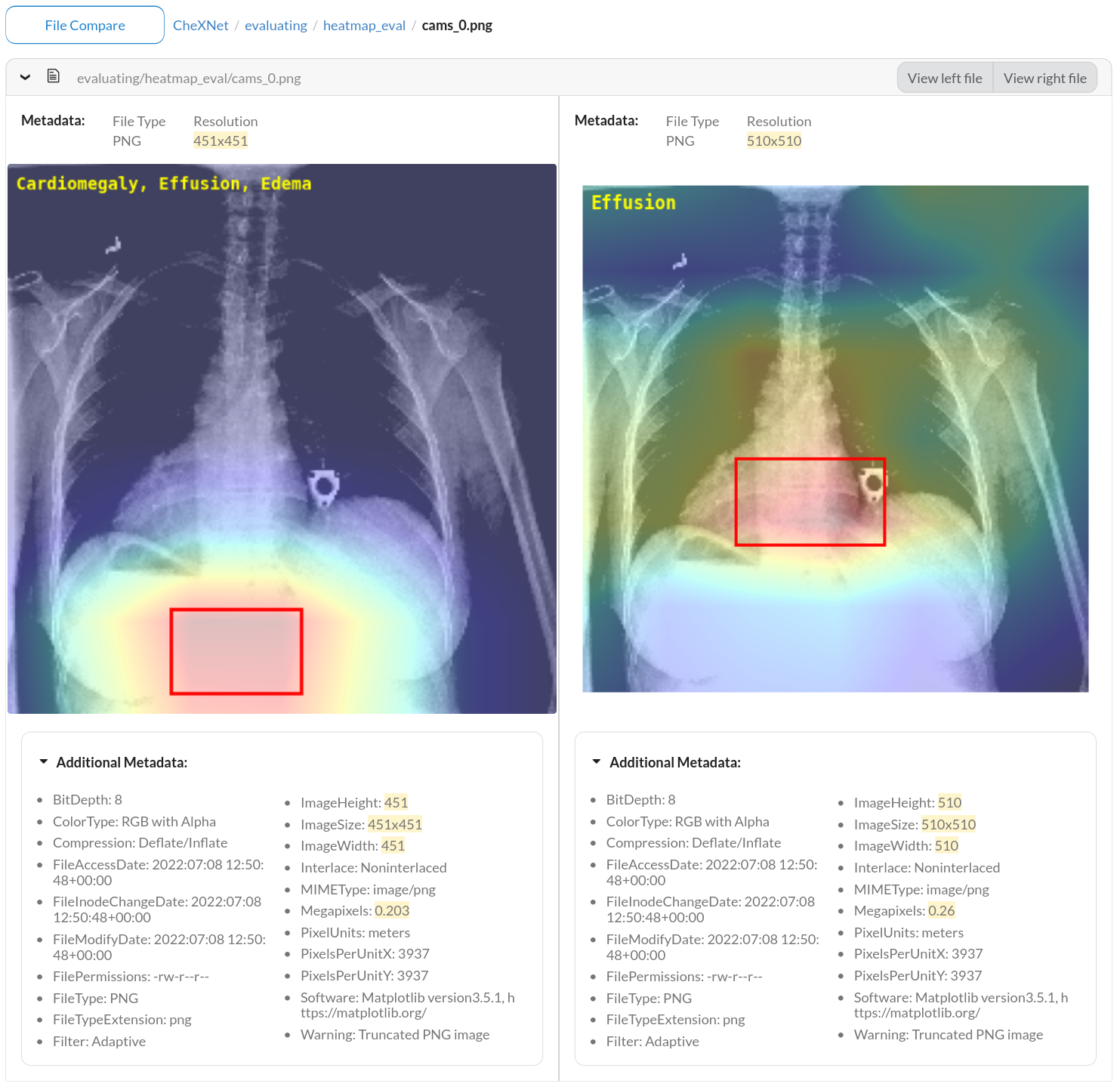

Diffing data, code, and annotations

In addition to browsing, DagsHub also supports diffing code, notebooks, CSVs, annotations, and directories. This allows a reviewer to see everything that has changed, which could influence a model's training or performance.
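To make concrete what a reviewer looks for in a data diff, here is a minimal local sketch that compares two versions of a small CSV using Python's standard `difflib`. It is only an illustration: it does not reflect how DagsHub implements diffing, and the file names and sample rows are hypothetical.

```python
import difflib


def csv_diff(old_text: str, new_text: str) -> list[str]:
    """Return unified-diff lines between two versions of a CSV file's contents."""
    return list(
        difflib.unified_diff(
            old_text.splitlines(),
            new_text.splitlines(),
            fromfile="old.csv",  # hypothetical file names, used only as diff labels
            tofile="new.csv",
            lineterm="",
        )
    )


# Hypothetical label file before and after a relabeling change.
old = "id,label\n1,cat\n2,dog\n"
new = "id,label\n1,cat\n2,wolf\n"

changes = csv_diff(old, new)
for line in changes:
    print(line)
```

A single changed label like this one is easy to miss when browsing files, yet it can change what a model learns, which is why reviewing diffs of data and annotations matters as much as reviewing diffs of code.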

For more information on how to diff and what diffing looks like for each of these types, check out our full documentation on diffing.

Communication via comments

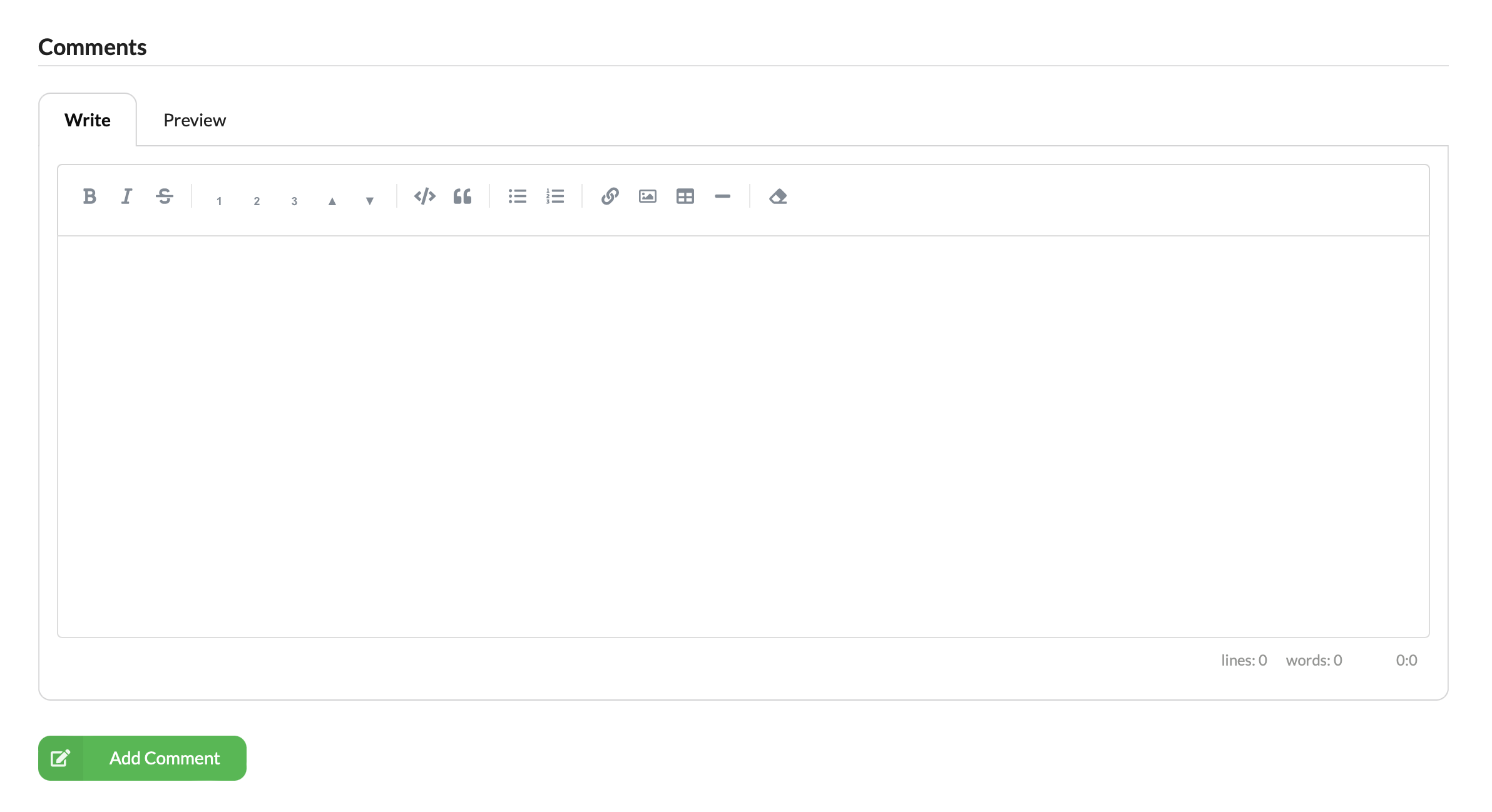

Communication between reviewers, researchers, engineers, and managers is the key to a successful project. To facilitate communications, DagsHub Discussions allows anyone to comment on a file (code, data, model, annotation, etc.), directory, or diff. Through comments, a reviewer can make suggestions to the repo author or start constructive discussions with the whole team.

To comment on a particular file or directory, navigate to it and then scroll to the bottom of the page. Here you should find a Comment box.

In addition to commenting on files or directories, DagsHub also supports comments on:

- Lines of code

- Notebook cells

- Image bounding boxes

- File diffs

These fine-grained commenting options allow reviewers to be more precise and clear in their reviews. For more information, see our full documentation.

Compare experiments during development

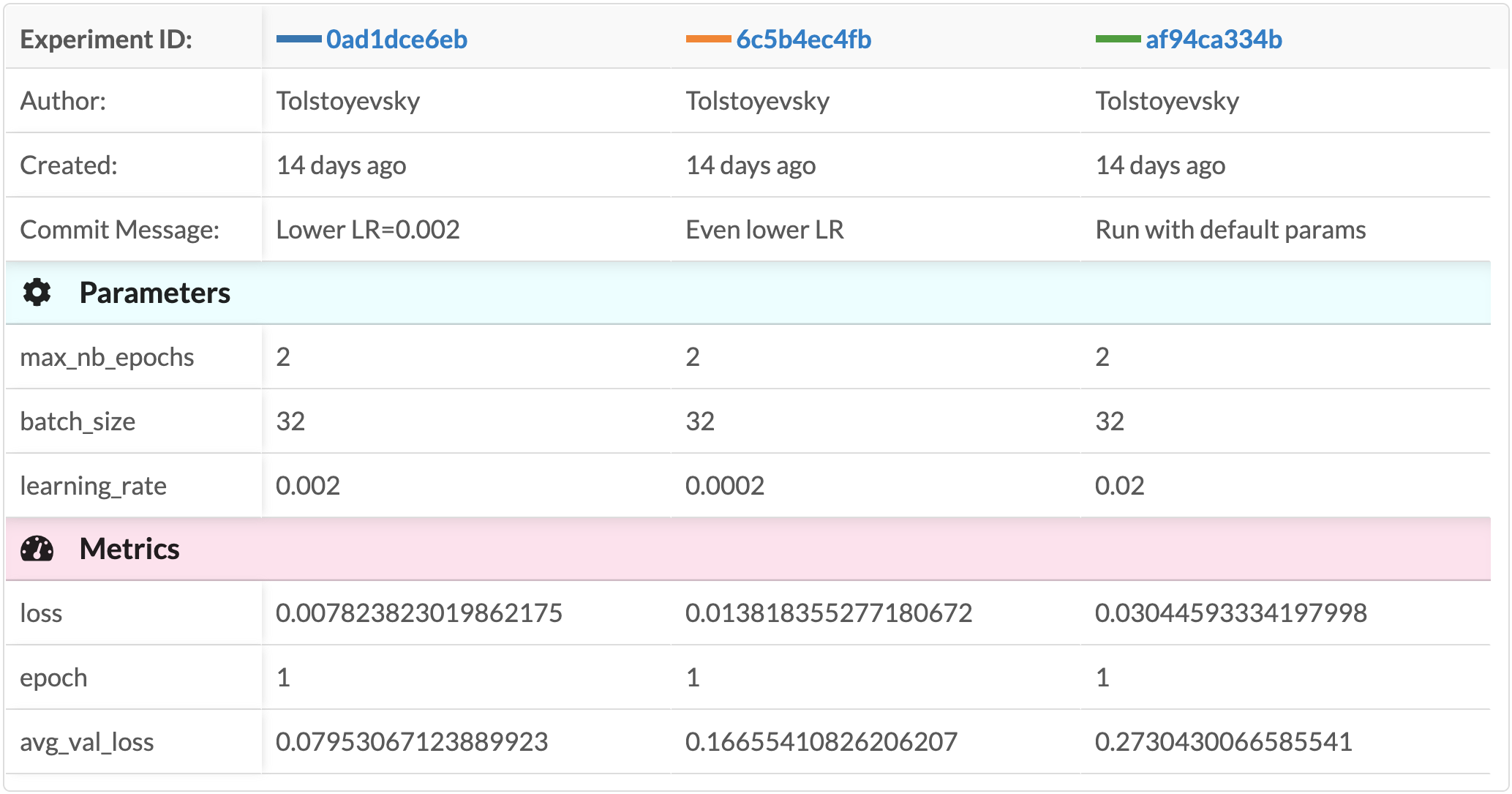

Through DagsHub's integration with MLflow, users can log the metrics that matter to a project across its training, validation, and testing phases. In the Experiments tab, reviewers can compare experiments and, with a single click, jump directly to the version of the project that generated those results.
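As a rough sketch of what logging per-phase metrics can look like, the example below uses the standard MLflow Python API (`set_tracking_uri`, `start_run`, `log_params`, `log_metric`). The tracking URI pattern, the helper names, and the `phase_metric` naming scheme are assumptions made for illustration; check your repository's remote settings for the actual tracking URI and credentials.

```python
def dagshub_tracking_uri(owner: str, repo: str) -> str:
    """Build an MLflow tracking URI for a DagsHub repo.

    Assumption: the repo exposes its MLflow server at <repo URL>.mlflow.
    """
    return f"https://dagshub.com/{owner}/{repo}.mlflow"


def log_phase_metrics(tracking_uri: str, params: dict, metrics_by_phase: dict) -> None:
    """Log hyperparameters and per-phase metrics in a single MLflow run.

    metrics_by_phase maps a phase name ("train", "val", "test") to a dict
    of metric name -> numeric value.
    """
    import mlflow  # imported here so the URI helper works without MLflow installed

    mlflow.set_tracking_uri(tracking_uri)
    with mlflow.start_run():
        mlflow.log_params(params)
        for phase, metrics in metrics_by_phase.items():
            for name, value in metrics.items():
                # Prefix each metric with its phase, e.g. "val_accuracy",
                # so phases can be told apart when comparing runs.
                mlflow.log_metric(f"{phase}_{name}", value)
```

A call might look like `log_phase_metrics(dagshub_tracking_uri("<user>", "<repo>"), {"lr": 0.001}, {"train": {"loss": 0.42}, "val": {"loss": 0.51}})`, with credentials supplied through MLflow's `MLFLOW_TRACKING_USERNAME` and `MLFLOW_TRACKING_PASSWORD` environment variables. Prefixing metric names by phase is one possible convention, not a requirement.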