DagsHub Storage¶

DagsHub automatically configures remote object storage for every repository, with 10 GB of free space. Anyone can use it without a degree in DevOps or a billing account with a cloud provider. The storage can be used as a general-purpose storage bucket, or you can use DVC to get more advanced versioning capabilities.

Connect External Storage Buckets

You can also get all the benefits of DagsHub Storage with your own storage bucket. To learn how to do that, go to the guide for connecting external storage.

What you get with DagsHub Storage¶

Every repository is provided with two places you can store the data your project needs:

- An S3 compatible storage bucket

- A DVC remote

You can use your access token to interact with either of them, and access control follows the access control of your repository: only repository writers can change the data, and if your repository is private, you control who can view and read the files.

Both of the storages are explorable through the web interface of the repository and through the Content API.

DVC data is shown alongside the Git repository files whenever DVC pointer files (.dvc) have been pushed to Git. The bucket can be explored through the "DagsHub Storage" entry in the "Storage Buckets" section on the homepage of your repository.

The DVC remote and the bucket are separate from each other

That means that files you push to the DVC remote won't show up in the storage bucket, and vice versa.

Working with the S3 compatible storage bucket¶

Python code¶

The DagsHub Python client has a function that can generate clients for the following S3 libraries:

- Boto3 (returns a boto3.client object) – Read the Boto3 docs

- S3Fs – Read the S3Fs docs

To get a client, add the following code, then use the client according to the documentation of the library:

Note

Most functions in the libraries ask for the name of the bucket as an argument. In those cases, use the name of the repository as the bucket name.

from dagshub import get_repo_bucket_client

boto_client = get_repo_bucket_client("<user>/<repo>", flavor="boto")

# Upload file
boto_client.upload_file(
    Bucket="<repo>",  # name of the repo
    Filename="local.csv",  # local path of file to upload
    Key="remote.csv",  # remote path where to upload the file
)

# Download file
boto_client.download_file(
    Bucket="<repo>",  # name of the repo
    Key="remote.csv",  # remote path from where to download the file
    Filename="local.csv",  # local path where to download the file
)

from dagshub import get_repo_bucket_client

s3fs_client = get_repo_bucket_client("<user>/<repo>", flavor="s3fs")

# Read from file
with s3fs_client.open("<repo>/remote.csv", "rb") as f:
    print(f.read())

# Write to file
with s3fs_client.open("<repo>/remote.csv", "wb") as f:
    f.write(b"Content")

# Upload file (can also upload directories)
s3fs_client.put(
    "local.csv",  # local path of file/dir to upload
    "<repo>/remote.csv",  # remote path where to upload the file
)
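The S3Fs example notes that put can upload directories. With the Boto3 flavor, a directory upload can be sketched by walking the local folder and calling upload_file once per file. This is a minimal sketch, not part of the DagsHub client: upload_directory is a hypothetical helper name, and it only assumes that the client exposes boto3's upload_file(Filename, Bucket, Key) as used above.

```python
from pathlib import Path


def upload_directory(client, bucket, local_dir, remote_prefix=""):
    """Upload every file under local_dir, keeping relative paths as keys.

    `client` is expected to expose boto3's upload_file(Filename, Bucket, Key),
    e.g. the object returned by get_repo_bucket_client(..., flavor="boto").
    Returns the list of keys that were uploaded.
    """
    root = Path(local_dir)
    prefix = remote_prefix.strip("/")
    uploaded = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue  # directories are implied by the key prefix
        relative = path.relative_to(root).as_posix()
        key = f"{prefix}/{relative}" if prefix else relative
        client.upload_file(Filename=str(path), Bucket=bucket, Key=key)
        uploaded.append(key)
    return uploaded


# Usage (needs network access and the dagshub package):
# from dagshub import get_repo_bucket_client
# client = get_repo_bucket_client("<user>/<repo>", flavor="boto")
# upload_directory(client, "<repo>", "data", remote_prefix="data")
```

Passing the client in as an argument keeps the helper independent of how the client was created, so it also works with a client built manually from the bucket credentials.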

RClone¶

RClone is a very convenient CLI tool that allows you to synchronize data between different storages, be they local, FTP or object storages.

To add the DagsHub storage bucket to RClone as a remote:

- Run rclone config in your terminal

- Type n to add a new remote

- Choose s3 as the storage type

- Choose Other (last) as the provider

- Choose "Enter AWS credentials in the next step." to enter the credentials manually

- Input your DagsHub token as both the Access Key ID and Secret Access Key

- Leave the region empty

- Set the endpoint to https://dagshub.com/api/v1/repo-buckets/s3/<user> (where user is the owner of the repository)

- Leave all the other steps empty, until you complete the setup.

The resulting config from RClone should look like this:

Configuration complete.

Options:

- type: s3

- provider: Other

- access_key_id: <token>

- secret_access_key: <token>

- endpoint: https://dagshub.com/api/v1/repo-buckets/s3/<user>

After the setup is done you can start using RClone with the bucket!

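Here's an example of how you can sync a local folder to the bucket (assuming the name of the remote in RClone is dagshub). Note that rclone sync makes the destination match the source, which deletes remote files that are absent locally; use rclone copy instead if you only want to add files: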

rclone sync <local_path_to_folder> dagshub:<repo_name>/<remote_path_to_folder>
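The options produced by the interactive setup above can also be rendered as a section of rclone's INI-style config file. The following is a minimal sketch under that assumption: rclone_remote_section is a hypothetical helper, the remote name dagshub is the one assumed in the example above, and the output would still need to be added to your rclone config file yourself (rclone config file prints its location).

```python
import configparser
import io


def rclone_remote_section(owner, token, remote_name="dagshub"):
    """Render an rclone config section with the options listed above."""
    # interpolation=None keeps any '%' characters in the token literal
    config = configparser.ConfigParser(interpolation=None)
    config[remote_name] = {
        "type": "s3",
        "provider": "Other",
        "access_key_id": token,
        "secret_access_key": token,
        "endpoint": f"https://dagshub.com/api/v1/repo-buckets/s3/{owner}",
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    # "<user>" and "<token>" are placeholders, as in the rest of this page
    print(rclone_remote_section("<user>", "<token>"))
```

Because the token is written in plain text, treat the resulting file like any other credentials file.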

Mounting the bucket as a filesystem [Linux only]¶

If your operating system supports FUSE, you can use s3fs-fuse to mount any S3 compatible bucket as a local filesystem. This includes DagsHub storage! After you mount a bucket you can interact with it in your file explorer.

Here's how you can mount the dagshub bucket at /media/dagshub (make sure that the path exists and you have access to it):

AWS_ACCESS_KEY_ID=<token> AWS_SECRET_ACCESS_KEY=<token> s3fs <repo_name> /media/dagshub -o url=https://dagshub.com/api/v1/repo-buckets/s3/<user> -o use_path_request_style

The use_path_request_style option is required for s3fs to work with our bucket. If you need to run it in the foreground instead of as a background process, add the -f flag at the end.
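If you launch the mount from a script, the command above can be assembled programmatically. This is a minimal sketch that only rebuilds the same arguments and environment variables shown above; s3fs_mount_command and s3fs_environment are hypothetical helper names, and actually running the command still requires s3fs-fuse to be installed.

```python
import os


def s3fs_mount_command(repo, owner, mount_point, foreground=False):
    """Build the s3fs argument list for mounting a DagsHub bucket."""
    cmd = [
        "s3fs", repo, mount_point,
        "-o", f"url=https://dagshub.com/api/v1/repo-buckets/s3/{owner}",
        "-o", "use_path_request_style",  # required for the DagsHub bucket
    ]
    if foreground:
        cmd.append("-f")  # keep s3fs in the foreground
    return cmd


def s3fs_environment(token, base_env=None):
    """Return a copy of the environment with the token set as both AWS keys."""
    env = dict(os.environ if base_env is None else base_env)
    env["AWS_ACCESS_KEY_ID"] = token
    env["AWS_SECRET_ACCESS_KEY"] = token
    return env


# Usage (requires s3fs-fuse and an existing, accessible mount point):
# import subprocess
# subprocess.run(
#     s3fs_mount_command("<repo_name>", "<user>", "/media/dagshub"),
#     env=s3fs_environment("<token>"),
#     check=True,
# )
```

Passing the credentials through the environment of the child process, rather than on the command line, mirrors the original command and keeps the token out of the argument list.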

Working with the DVC remote¶

Configuring DVC¶

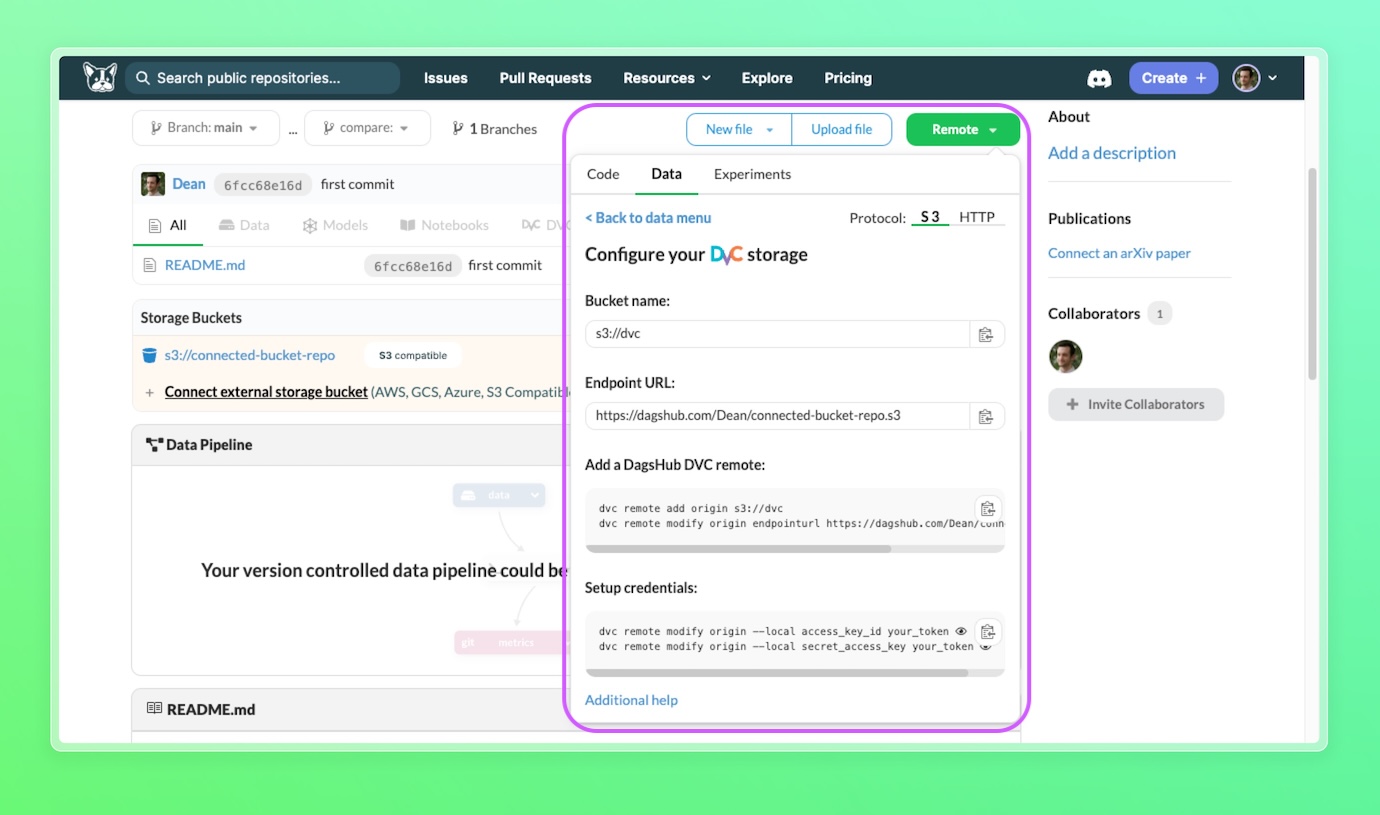

- Go to your repository homepage, click on the green "Remote" button in the top right, go to the Data section and choose DVC there.

- Copy the commands to set up your local machine with DagsHub Storage

- Enter a terminal in your project, paste the commands and run them (the following commands are an example, and the actual commands are the ones you should copy from the Remote dropdown):

dvc remote add origin s3://dvc
dvc remote modify origin endpointurl https://dagshub.com/<DagsHub-user-name>/hello-world.s3
dvc remote modify origin --local access_key_id <Token>
dvc remote modify origin --local secret_access_key <Token>

Why --local?

Everything you configure without --local will end up in the .dvc/config file, which is tracked by git, and will appear in your repository. Personal info like authentication details should always be kept local.

Note: You need to be inside a Git and DVC directory for this process to succeed. To learn how to do that, please follow the first part of the Get Started section.
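The split between shared settings and local credentials can be made explicit in a small script. This is a minimal sketch, assuming the endpoint follows the https://dagshub.com/<user>/<repo>.s3 pattern seen in the example commands; the commands shown in the Remote dropdown remain the authoritative ones, and dvc_remote_commands is a hypothetical helper name.

```python
def dvc_remote_commands(owner, repo, token, remote="origin"):
    """Return (shared, local) lists of dvc commands as argument lists.

    Shared commands write to .dvc/config, which is tracked by git.
    Local commands use --local so the token stays out of the repository.
    """
    # Assumption: endpoint pattern generalized from the example above
    endpoint = f"https://dagshub.com/{owner}/{repo}.s3"
    shared = [
        ["dvc", "remote", "add", remote, "s3://dvc"],
        ["dvc", "remote", "modify", remote, "endpointurl", endpoint],
    ]
    local = [
        ["dvc", "remote", "modify", remote, "--local", "access_key_id", token],
        ["dvc", "remote", "modify", remote, "--local", "secret_access_key", token],
    ]
    return shared, local


# Usage (must be run inside a Git and DVC directory):
# import subprocess
# shared, local = dvc_remote_commands("<DagsHub-user-name>", "hello-world", "<Token>")
# for cmd in shared + local:
#     subprocess.run(cmd, check=True)
```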

Pull data¶

dvc pull -r origin

Push data¶

dvc push -r origin

Miscellaneous¶

S3 Compatible Bucket credentials¶

If you have a use case not covered in the "Working with the S3 compatible storage bucket" section, here are the credentials you need to connect to the bucket:

- Bucket name: <name of the repo>

- Endpoint URL: https://dagshub.com/api/v1/repo-buckets/s3/<username>

- Access Key ID: <Token>

- Secret Access Key: <Token>

The region is irrelevant. If your library or program requires a region, use the AWS default, us-east-1.
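As an illustration, the credentials above map onto a manually constructed Boto3 client roughly as follows. This is a minimal sketch rather than the DagsHub client's own implementation: bucket_client_kwargs and make_boto3_client are hypothetical helper names, and the region is set to us-east-1 only because some tools require one.

```python
def bucket_client_kwargs(owner, token, region="us-east-1"):
    """Build keyword arguments for boto3.client from the credentials above."""
    return {
        "service_name": "s3",
        "endpoint_url": f"https://dagshub.com/api/v1/repo-buckets/s3/{owner}",
        "aws_access_key_id": token,      # the DagsHub token is used for both keys
        "aws_secret_access_key": token,
        "region_name": region,           # irrelevant to DagsHub, but often required
    }


def make_boto3_client(owner, token):
    """Create an S3 client pointed at the DagsHub endpoint (requires boto3)."""
    import boto3  # imported lazily so the helper above works without boto3

    return boto3.client(**bucket_client_kwargs(owner, token))


# Usage (needs network access; the bucket name is the name of the repo):
# client = make_boto3_client("<username>", "<Token>")
# client.download_file(Bucket="<name of the repo>", Key="remote.csv", Filename="local.csv")
```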

Endpoints supported by the S3 Compatible Bucket¶

Objects:

Multipart uploads: