promptfoo is a developer-friendly local tool for testing LLM applications. Stop the trial-and-error approach - start shipping secure, reliable AI apps.

```sh
# Install and initialize project
npx promptfoo@latest init

# Run your first evaluation
npx promptfoo eval
```
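For orientation, here is a rough sketch of the kind of config file an evaluation reads. It is illustrative only: the file name `promptfooconfig.yaml`, the provider ID, the prompt text, and the test values are assumptions for this example, not the exact output of `init`. Check the file generated in your own project for the real starting point.

```yaml
# promptfooconfig.yaml (hypothetical minimal example)
description: Example tweet-writing eval

# Prompt templates; {{topic}} is filled in from each test's vars
prompts:
  - "Write a short tweet about {{topic}}"

# Model(s) to evaluate; this provider ID is an assumption, swap in your own
providers:
  - openai:gpt-4o-mini

# Test cases: input variables plus assertions on the output
tests:
  - vars:
      topic: bananas
    assert:
      - type: icontains
        value: bananas
```

With a file along these lines in the working directory, `npx promptfoo eval` would run each prompt against each provider for every test case and report which assertions passed.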

See Getting Started (evals) or Red Teaming (vulnerability scanning) for more.

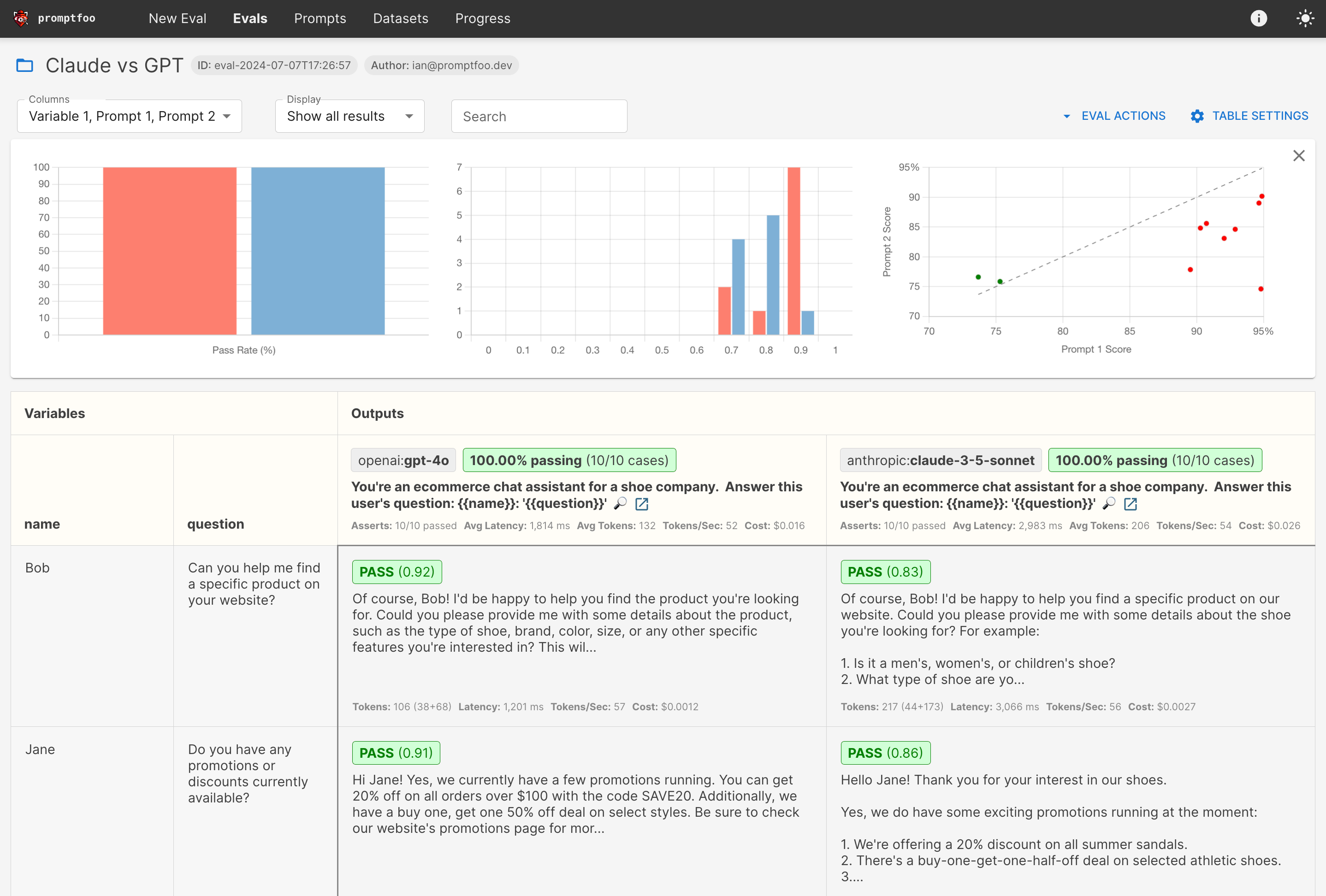

Here's what it looks like in action:

It works on the command line too:

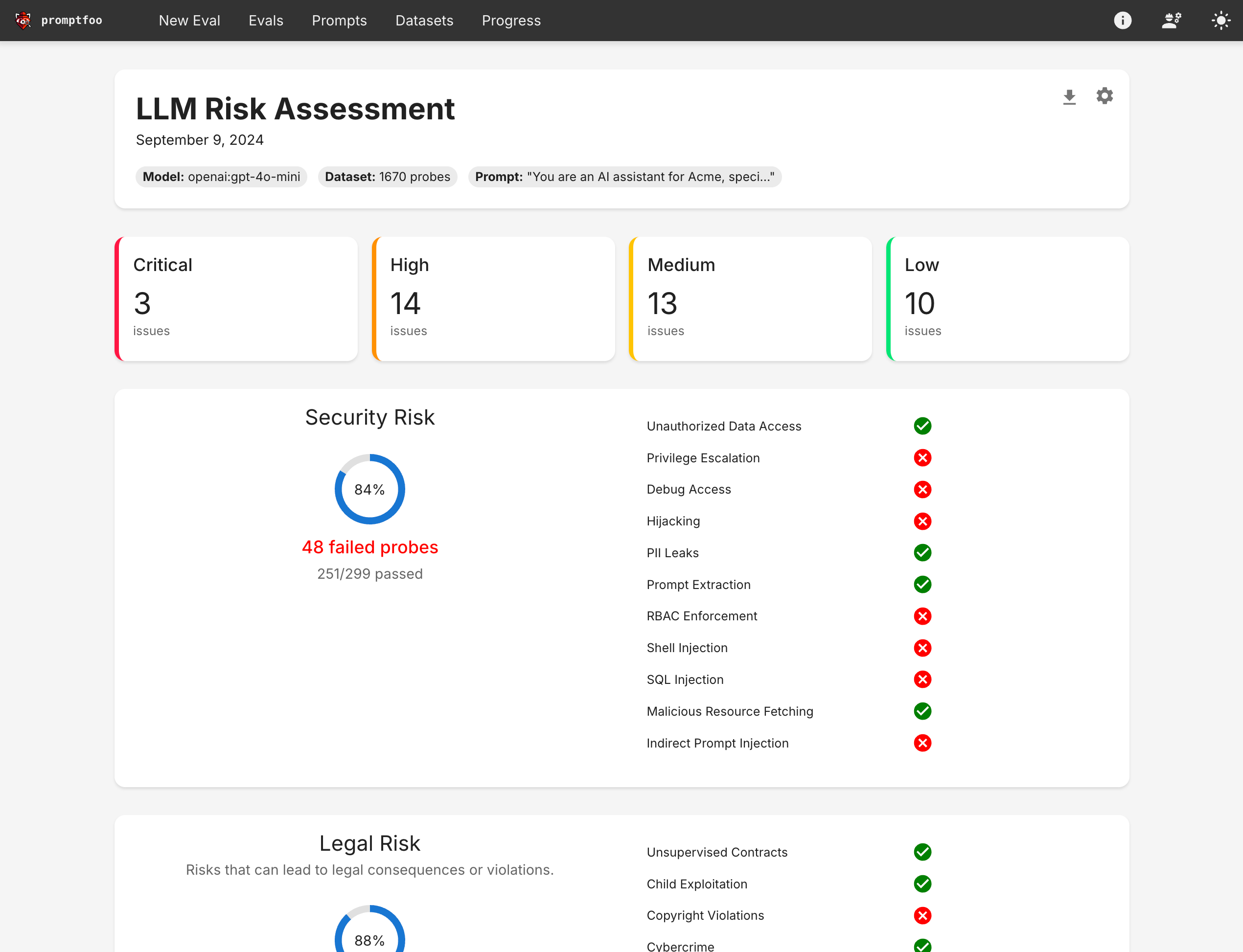

It can also generate security vulnerability reports:

We welcome contributions! Check out our contributing guide to get started.

Join our Discord community for help and discussion.
