
| title | description | keywords | sidebar_position |
|---|---|---|---|
| Getting Started | Learn how to set up your first promptfoo config file, create prompts, configure providers, and run your first LLM evaluation. | [getting started setup configuration prompts providers evaluation llm testing] | 5 |

import CodeBlock from '@theme/CodeBlock';
import Tabs from '@theme/Tabs';
import TabItem from '@theme/TabItem';

After installing promptfoo, you can set up your first config file in two ways:

Set up your first config file with a pre-built example by running this command with npx, npm, or brew:

```bash
# npx
npx promptfoo@latest init --example getting-started

# npm
npm install -g promptfoo
promptfoo init --example getting-started

# brew
brew install promptfoo
promptfoo init --example getting-started
```

This will create a new directory with a basic example that tests translation prompts across different models. The example includes:

- `promptfooconfig.yaml` with sample prompts, providers, and test cases.
- A `README.md` file explaining how the example works.

If you prefer to start from scratch instead of using the example, run `promptfoo init` without the `--example` flag. The command will guide you through an interactive setup process to create your custom configuration.

To configure your evaluation:

Set up your prompts: Open `promptfooconfig.yaml` and add prompts that you want to test. Use double curly braces for variable placeholders: `{{variable_name}}`. For example:

```yaml
prompts:
  - 'Convert this English to {{language}}: {{input}}'
  - 'Translate to {{language}}: {{input}}'
```

Add providers to specify AI models you want to test. Promptfoo supports 50+ providers including OpenAI, Anthropic, Google, and many others:

```yaml
providers:
  - openai:gpt-4.1
  - openai:o4-mini
  - anthropic:messages:claude-sonnet-4-20250514
  - vertex:gemini-2.5-pro
  # Or use your own custom provider
  - file://path/to/custom/provider.py
```

Each provider is specified using a simple format: `provider_name:model_name`. For example:

- `openai:gpt-4.1` for GPT-4.1
- `openai:o4-mini` for OpenAI's o4-mini
- `anthropic:messages:claude-sonnet-4-20250514` for Anthropic's Claude
- `bedrock:us.meta.llama3-3-70b-instruct-v1:0` for Meta's Llama 3.3 70B via AWS Bedrock

Most providers need authentication. For OpenAI:

```sh
export OPENAI_API_KEY=sk-abc123
```

You can use:

» See our full providers documentation for detailed setup instructions for each provider.

Add test inputs: Add some example inputs for your prompts. Optionally, add assertions to set output requirements that are checked automatically.

For example:

```yaml
tests:
  - vars:
      language: French
      input: Hello world
  - vars:
      language: Spanish
      input: Where is the library?
```
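To make the variable substitution concrete, here is a small sketch of how one test case fills in the placeholders of the two example prompts. The rendered strings in the comments are worked out by hand from the templates, not captured from a real run.

```yaml
prompts:
  - 'Convert this English to {{language}}: {{input}}'
  - 'Translate to {{language}}: {{input}}'

tests:
  - vars:
      language: French
      input: Hello world
    # Rendered prompt 1: Convert this English to French: Hello world
    # Rendered prompt 2: Translate to French: Hello world
```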

When writing test cases, think of core use cases and potential failures that you want to make sure your prompts handle correctly.
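As one possible illustration of the optional assertions mentioned above, the sketch below attaches a check to each translation test. It assumes the `icontains` assertion type (a case-insensitive substring match) and that the expected words appear in any reasonable translation. The expected values are illustrative assumptions and are not taken from the example project.

```yaml
tests:
  - vars:
      language: French
      input: Hello world
    assert:
      # Assumed expectation: a French translation should contain "bonjour"
      - type: icontains
        value: bonjour
  - vars:
      language: Spanish
      input: Where is the library?
    assert:
      # Assumed expectation: a Spanish translation should mention "biblioteca"
      - type: icontains
        value: biblioteca
```

Simple substring checks like these are cheap to run and catch obvious failures, such as a response written in the wrong language.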

Run the evaluation: Make sure you're in the directory containing `promptfooconfig.yaml`, then run `npx promptfoo@latest eval`.

This tests every combination of prompt, model, and test case.

After the evaluation is complete, open the web viewer to review the outputs:

```sh
# npx
npx promptfoo@latest view

# npm or brew
promptfoo view
```

The YAML configuration format runs each prompt through a series of example inputs (also known as "test cases") and checks whether the outputs meet requirements (also known as "asserts").

Asserts are optional. Many people get value out of reviewing outputs manually, and the web UI helps facilitate this.

:::tip
See the Configuration docs for a detailed guide.
:::


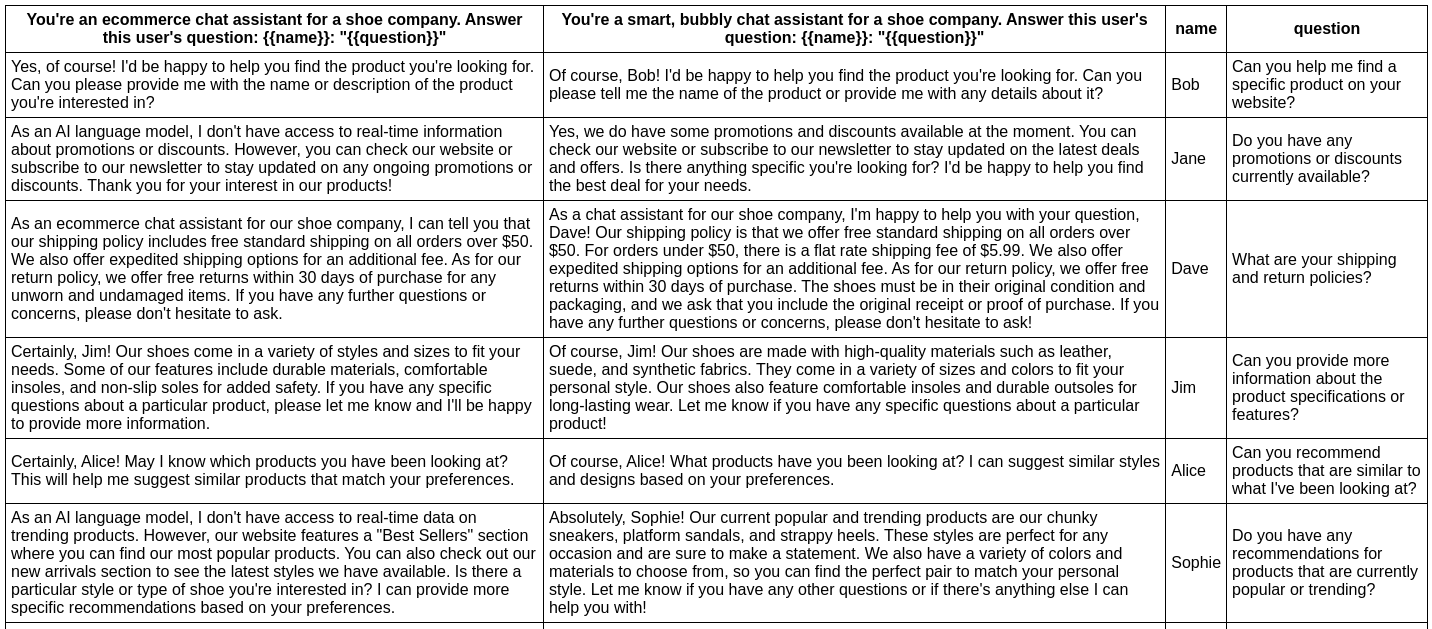

In this example, we evaluate whether adding adjectives to the personality of an assistant bot affects the responses.

You can quickly set up this example by running:

```sh
# npx
npx promptfoo@latest init --example self-grading

# npm or brew
promptfoo init --example self-grading
```

Here is the configuration:

```yaml
# yaml-language-server: $schema=https://promptfoo.dev/config-schema.json

# Load prompts
prompts:
  - file://prompts.txt

# Set an LLM
providers:
  - openai:gpt-4.1

# These test properties are applied to every test
defaultTest:
  assert:
    # Ensure the assistant doesn't mention being an AI
    - type: llm-rubric
      value: Do not mention that you are an AI or chat assistant

    # Prefer shorter outputs using a scoring function
    - type: javascript
      value: Math.max(0, Math.min(1, 1 - (output.length - 100) / 900));

# Set up individual test cases
tests:
  - vars:
      name: Bob
      question: Can you help me find a specific product on your website?
  - vars:
      name: Jane
      question: Do you have any promotions or discounts currently available?
  - vars:
      name: Ben
      question: Can you check the availability of a product at a specific store location?
  - vars:
      name: Dave
      question: What are your shipping and return policies?
  - vars:
      name: Jim
      question: Can you provide more information about the product specifications or features?
  - vars:
      name: Alice
      question: Can you recommend products that are similar to what I've been looking at?
  - vars:
      name: Sophie
      question: Do you have any recommendations for products that are currently popular or trending?
  - vars:
      name: Jessie
      question: How can I track my order after it has been shipped?
  - vars:
      name: Kim
      question: What payment methods do you accept?
  - vars:
      name: Emily
      question: Can you help me with a problem I'm having with my account or order?
```
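The `javascript` assertion in this configuration maps output length to a score between 0 and 1. As a worked illustration, the comments below evaluate the formula at a few lengths. The values are computed by hand and assume `output.length` is the character count of the model's response.

```yaml
defaultTest:
  assert:
    - type: javascript
      # score = clamp(1 - (length - 100) / 900, 0, 1)
      #   length <= 100  -> 1    (e.g. 1 - (100 - 100) / 900 = 1)
      #   length  = 550  -> 0.5  (1 - 450 / 900)
      #   length >= 1000 -> 0    (e.g. 1 - 900 / 900 = 0)
      value: Math.max(0, Math.min(1, 1 - (output.length - 100) / 900));
```

In other words, outputs of 100 characters or fewer receive the full score, and the score falls linearly to 0 at 1,000 characters.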

Running `npx promptfoo@latest eval` from the command line runs this example.

This command evaluates the prompts, substituting variable values, and prints the results in your terminal.

Have a look at the setup and full output here.

You can also output a nice spreadsheet, JSON, YAML, or an HTML file:

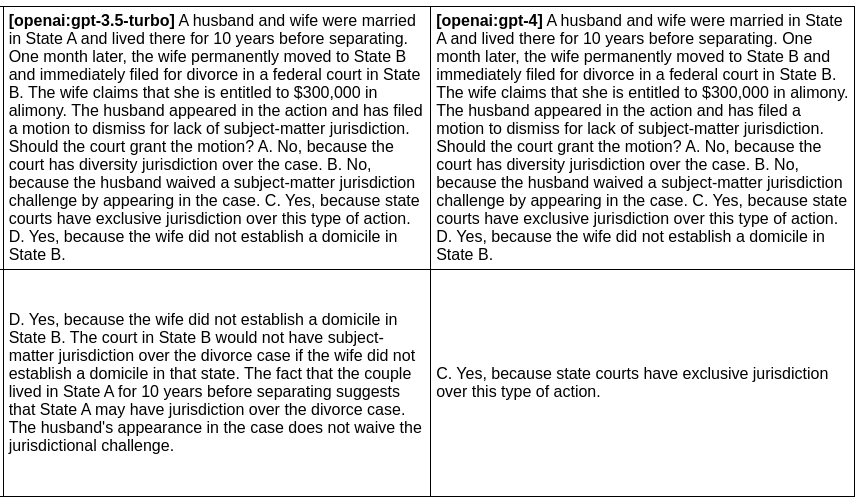

npx promptfoo@latest eval -o output.html promptfoo eval -o output.html promptfoo eval -o output.htmlIn this next example, we evaluate the difference between GPT-4.1 and o4-mini outputs for a given prompt:

You can quickly set up this example by running:

```sh
# npx
npx promptfoo@latest init --example gpt-4o-vs-4o-mini

# npm or brew
promptfoo init --example gpt-4o-vs-4o-mini
```

```yaml
prompts:
  - file://prompt1.txt
  - file://prompt2.txt

# Set the LLMs we want to test
providers:
  - openai:o4-mini
  - openai:gpt-4.1
```

A simple `npx promptfoo@latest eval` will run the example. Also note that you can override parameters directly from the command line. For example, this command:

Produces this HTML table:

Full setup and output here.

A similar approach can be used to run other model comparisons. For example, you can:

`examples/` directory of our GitHub repository.

The above examples create a table of outputs that can be manually reviewed. By setting up assertions, you can automatically grade outputs on a pass/fail basis.

For more information on automatically assessing outputs, see Assertions & Metrics.
